Lessons From a Dragonfly’s Brain

Looking to the specialized nervous systems of insects as a model for artificial intelligence may prove just as valuable as studying the human brain, if not more so. Consider the brains of the ants in your pantry. Each has some 250,000 neurons. Larger insects have closer to 1 million. In my research at Sandia National Laboratories in Albuquerque, I study the brain of one of these larger insects, the dragonfly. My colleagues at Sandia, a national-security laboratory, and I hope to take advantage of these insects’ specializations to design computing systems optimized for tasks like intercepting an incoming missile or following an odor plume. By harnessing the speed, simplicity, and efficiency of the dragonfly nervous system, we aim to design computers that perform these functions faster and at a fraction of the power that conventional systems consume.

Looking to a dragonfly as a harbinger of future computer systems may seem counterintuitive. The developments in artificial intelligence and machine learning that make the news are usually algorithms that mimic human intelligence or even surpass people’s abilities. Neural networks can already perform as well as people, if not better, at some specific tasks, such as detecting cancer in medical scans. And the potential of these neural networks stretches far beyond visual processing. The computer program AlphaZero, trained by self-play, is the best Go player in the world. Its sibling AI, AlphaStar, ranks among the best StarCraft II players.

Such feats, however, come at a cost. Developing these sophisticated systems requires massive amounts of processing power, generally available only to select institutions with the fastest supercomputers and the resources to support them. And the energy cost is off-putting.

Recent estimates suggest that the carbon emissions resulting from developing and training a natural-language processing algorithm are greater than those produced by four cars over their lifetimes.

It takes the dragonfly only about 50 milliseconds to begin to respond to a prey’s maneuver. If we assume 10 ms for cells in the eye to detect and transmit information about the prey, and another 5 ms for muscles to start producing force, this leaves only 35 ms for the neural circuitry to make its calculations. Given that it typically takes a single neuron at least 10 ms to integrate inputs, the underlying neural network can be at most three layers deep.

But does an artificial neural network really need to be large and complex to be useful? I believe it doesn’t. To reap the benefits of neural-inspired computers in the near term, we must strike a balance between simplicity and sophistication.

Which brings me back to the dragonfly, an animal with a brain that may provide precisely the right balance for certain applications.

If you have ever encountered a dragonfly, you already know how fast these beautiful creatures can zoom, and you have seen their incredible agility in the air. Maybe less obvious from casual observation is their excellent hunting ability: Dragonflies successfully capture up to 95 percent of the prey they pursue, eating hundreds of mosquitoes in a day.

The physical prowess of the dragonfly has certainly not gone unnoticed. For a long time, U.S. agencies have experimented with using dragonfly-inspired designs for surveillance drones. Now it is time to turn our attention to the brain that controls this tiny hunting machine.

While dragonflies may not be able to play strategic games like Go, a dragonfly does demonstrate a form of strategy in the way it aims ahead of its prey’s current location to intercept its dinner. This requires calculations performed extremely fast: It typically takes a dragonfly just 50 milliseconds to start turning in response to a prey’s maneuver. It does this while tracking the angle between its head and its body, so that it knows which wings to flap faster to turn ahead of the prey. And it also tracks its own movements, because as the dragonfly turns, the prey will also appear to move.

The model dragonfly reorients in response to the prey’s turning. The smaller black circle is the dragonfly’s head, held at its initial position. The solid black line indicates the direction of the dragonfly’s flight; the dotted blue lines are the plane of the model dragonfly’s eye. The pink star is the prey’s position relative to the dragonfly, with the dotted pink line indicating the dragonfly’s line of sight.

So the dragonfly’s brain is performing a remarkable feat, given that the time needed for a single neuron to add up all its inputs (called its membrane time constant) exceeds 10 milliseconds. If you factor in time for the eye to process visual information and for the muscles to produce the force needed to move, there is really only time for three, maybe four, layers of neurons, in sequence, to add up their inputs and pass on information.
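The latency budget in the paragraph above is simple arithmetic, and the short Python sketch below only makes it explicit. It uses the figures quoted in the text and treats 10 ms as a fixed cost for each neuron in the chain. Under those assumptions the result is an upper bound, not a measurement.

```python
# Rough latency budget for a dragonfly's interception response, using the
# figures quoted in the text (all values in milliseconds).
RESPONSE_MS = 50   # time from prey maneuver to the start of the turn
EYE_MS = 10        # eye cells detect the prey and transmit the signal
MUSCLE_MS = 5      # muscles begin producing force
NEURON_MS = 10     # approximate membrane time constant of one neuron


def max_serial_layers(response_ms: int, eye_ms: int, muscle_ms: int, neuron_ms: int) -> int:
    """Return how many neurons in sequence fit in the time left for computation."""
    compute_ms = response_ms - eye_ms - muscle_ms
    return compute_ms // neuron_ms


compute_ms = RESPONSE_MS - EYE_MS - MUSCLE_MS
layers = max_serial_layers(RESPONSE_MS, EYE_MS, MUSCLE_MS, NEURON_MS)
print(f"{compute_ms} ms left for computation -> at most {layers} layers")
```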

Could I build a neural network that works like the dragonfly interception system? I also wondered about uses for such a neural-inspired interception system. Being at Sandia, I immediately considered defense applications, such as missile defense. I imagined future missiles with onboard systems designed to rapidly calculate interception trajectories without affecting a missile’s weight or power consumption. But there are civilian applications as well.

For example, the algorithms that control self-driving cars might be made more efficient, no longer requiring a trunkful of computing equipment. If a dragonfly-inspired system can perform the calculations to plot an interception trajectory, perhaps autonomous drones could use it to avoid collisions. And if a computer could be made the same size as a dragonfly brain (about 6 cubic millimeters), perhaps insect repellent and mosquito netting will one day become a thing of the past, replaced by tiny insect-zapping drones!

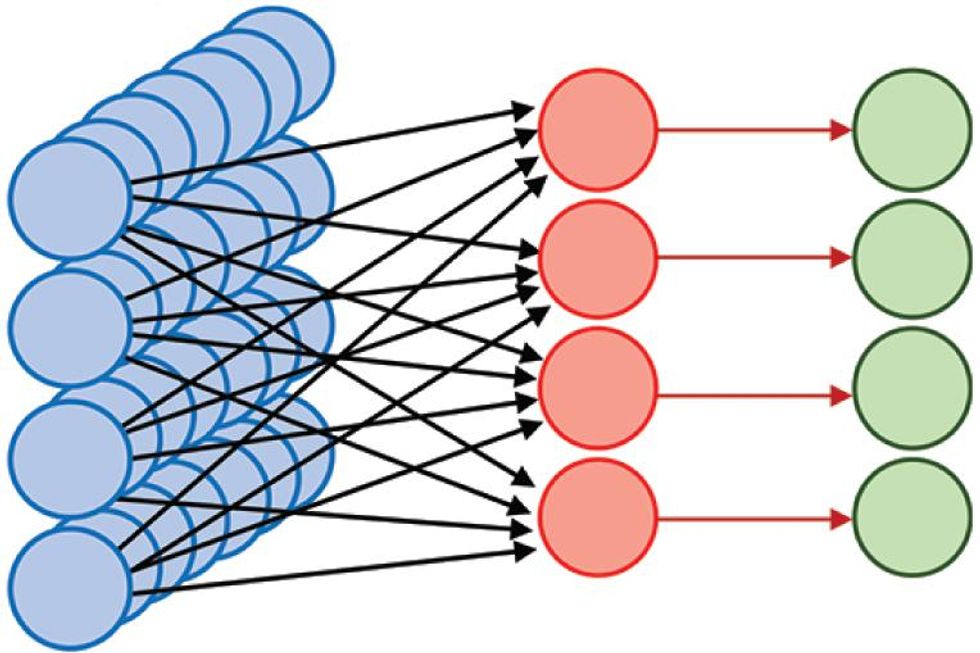

To begin to answer these questions, I created a simple neural network to stand in for the dragonfly’s nervous system and used it to calculate the turns that a dragonfly makes to capture prey. My three-layer neural network exists as a software simulation. Initially, I worked in Matlab simply because that was the coding environment I was already using. I have since ported the model to Python.

Because dragonflies have to see their prey to capture it, I started by simulating a simplified version of the dragonfly’s eyes, capturing the minimum detail required for tracking prey. Although dragonflies have two eyes, it is generally accepted that they do not use stereoscopic depth perception to estimate the distance to their prey. My model does not include both eyes. Nor did I try to match the resolution of a dragonfly eye. Instead, the first layer of the neural network includes 441 neurons that represent input from the eyes. Each describes a specific region of the visual field, and these regions are tiled to form a 21-by-21-neuron array that covers the dragonfly’s field of view. As the dragonfly turns, the location of the prey’s image in the dragonfly’s field of view changes. The dragonfly calculates the turns required to align the prey’s image with one (or a few, if the prey is large enough) of these “eye” neurons. A second set of 441 neurons, also in the first layer of the network, tells the dragonfly which eye neurons should be aligned with the prey’s image, that is, where the prey should be within its field of view.
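Here is a minimal Python sketch of how the first layer’s 441 “eye” neurons might encode the prey’s position. It is not the author’s code. The 60° field of view, the binning scheme and the function name `eye_layer` are assumptions made for illustration. Each direction is assigned to the tile of the 21-by-21 grid it falls in, and the same encoding is reused for the second set of 441 neurons, which marks where the prey should appear.

```python
import numpy as np

GRID = 21        # 21-by-21 tiling of the visual field, as described in the text
FOV_DEG = 60.0   # hypothetical: total angular width covered by the grid


def eye_layer(azimuth_deg: float, elevation_deg: float) -> np.ndarray:
    """Return a GRID x GRID activity map with one active "eye" neuron.

    The prey's direction relative to the head is binned into the tile it
    falls in; directions outside the field of view produce no activity.
    """
    activity = np.zeros((GRID, GRID))
    half = FOV_DEG / 2
    if abs(azimuth_deg) > half or abs(elevation_deg) > half:
        return activity
    tile = FOV_DEG / GRID  # angular width of one tile
    col = min(int((azimuth_deg + half) // tile), GRID - 1)    # left to right
    row = min(int((half - elevation_deg) // tile), GRID - 1)  # top to bottom
    activity[row, col] = 1.0
    return activity


# Prey slightly right of and below center; desired position straight ahead.
prey = eye_layer(5.0, -2.0)
target = eye_layer(0.0, 0.0)   # hypothetical "target" neuron map
print(prey.size, np.argwhere(prey).tolist(), np.argwhere(target).tolist())
```

A single active tile is the simplest choice. A larger prey could instead switch on several neighboring tiles.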

The design dragonfly engages its prey.

Processing happens between the first and third layers of my artificial neural network. These are the calculations that take input describing the motion of an object across the field of vision and turn it into instructions about which direction the dragonfly needs to turn. In this second layer, I used an array of 194,481 (21⁴) neurons, probably much larger than the number of neurons a dragonfly uses for this task.

I precalculated the weights of the connections between all the neurons in the network. While these weights could be learned with enough time, there is an advantage to “learning” through evolution and preprogrammed neural network architectures. Once it comes out of its nymph stage as a winged adult (technically referred to as a teneral), the dragonfly does not have a parent to feed it or show it how to hunt. The dragonfly is in a vulnerable state and getting used to a new body, so it would be disadvantageous to have to figure out a hunting strategy at the same time. I set the weights of the network to allow the model dragonfly to calculate the correct turns to intercept its prey from incoming visual information.

What turns are those? Well, if a dragonfly wants to catch a mosquito that is crossing its path, it can’t just aim at the mosquito. To borrow from what hockey player Wayne Gretzky once said about pucks, the dragonfly has to aim for where the mosquito is going to be. You might think that following Gretzky’s advice would require a complex algorithm, but in fact the strategy is quite simple: All the dragonfly needs to do is maintain a constant angle between its line of sight to its lunch and a fixed reference direction.
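There is one possible reading of the number 194,481. Since 21⁴ = 441 × 441, there is one second-layer neuron for every pairing of an “eye” neuron (where the prey’s image is) with a “target” neuron (where it should be). The text does not spell this interpretation out, so the sketch below assumes it. Each pair neuron is wired to the motor output with a hypothetical precalculated weight equal to the turn, in grid units, that would move the image from one cell to the other. The sketch illustrates conjunctive coding with fixed weights. It does not reproduce the weights of the actual model.

```python
import numpy as np

GRID = 21
N_EYE = GRID * GRID        # 441 neurons in each first-layer set
N_HIDDEN = N_EYE * N_EYE   # 441 * 441 = 21**4 = 194,481 second-layer neurons


def precalculated_weights() -> np.ndarray:
    """Hypothetical fixed weights from each second-layer neuron to a 2-D motor output.

    Neuron (a, d) stands for "image at cell a, wanted at cell d"; its weight is
    the turn in grid units, (row_a - row_d, col_a - col_d), where a positive
    column means turn right and a positive row means pitch down.
    """
    rows, cols = np.divmod(np.arange(N_EYE), GRID)
    d_row = rows[:, None] - rows[None, :]
    d_col = cols[:, None] - cols[None, :]
    return np.stack([d_row.ravel(), d_col.ravel()], axis=1).astype(float)


W = precalculated_weights()   # shape (194481, 2), set once and never trained


def motor_command(prey: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Map two GRID x GRID first-layer maps to a (row, col) turn command."""
    # A pair neuron is active only when both its eye cell and its target cell are.
    hidden = np.outer(prey.ravel(), target.ravel()).ravel()
    total = hidden.sum()
    # Averaging the votes handles prey large enough to cover several cells.
    return hidden @ W / total if total > 0 else np.zeros(2)


prey = np.zeros((GRID, GRID))
prey[11, 12] = 1.0     # image is right of and below center
target = np.zeros((GRID, GRID))
target[10, 10] = 1.0   # image should sit at the center
print(motor_command(prey, target))
```

In this scheme the weights need no training because the correct turn for every pairing is known in advance. That matches the text’s point about “learning” through evolution.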

Readers who have any experience piloting boats will recognize why that is. They know to get worried when the angle between the line of sight to another boat and a reference direction (for example, due north) remains constant, because they are on a collision course. Mariners have long avoided steering such a course, known as parallel navigation.
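The mariners’ rule can be checked numerically. In this Python sketch, two boats hold course and speed. The positions, speeds and helper names are invented for illustration. If the compass bearing from one boat to the other never changes while the range shrinks, the boats will collide.

```python
import math


def bearings_and_ranges(own_pos, own_vel, other_pos, other_vel, steps=5, dt=1.0):
    """(bearing in degrees clockwise from north, range) from our boat to the
    other boat at successive times, assuming both hold course and speed.
    Coordinates are (east, north)."""
    track = []
    for k in range(steps):
        t = k * dt
        dx = (other_pos[0] + other_vel[0] * t) - (own_pos[0] + own_vel[0] * t)
        dy = (other_pos[1] + other_vel[1] * t) - (own_pos[1] + own_vel[1] * t)
        track.append((math.degrees(math.atan2(dx, dy)) % 360.0, math.hypot(dx, dy)))
    return track


def on_collision_course(track, tol_deg=0.5):
    """Constant bearing with decreasing range (ignores 0/360 wrap-around)."""
    bearing_values = [b for b, _ in track]
    range_values = [r for _, r in track]
    steady = max(bearing_values) - min(bearing_values) < tol_deg
    closing = all(r2 < r1 for r1, r2 in zip(range_values, range_values[1:]))
    return steady and closing


# We head north at 1 unit per time step; the other boat heads west.
collision = bearings_and_ranges((0, 0), (0, 1), (10, 10), (-1, 0))
passing = bearings_and_ranges((0, 0), (0, 1), (10, 20), (-1, 0))
print(on_collision_course(collision), on_collision_course(passing))
```

In the first case the bearing stays at 45 degrees while the range falls, so the boats meet. In the second the bearing drifts, so they pass.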

Translated to dragonflies, which want to collide with their prey, the prescription is simple: Keep the line of sight to your prey constant relative to some external reference. However, this task is not necessarily trivial for a dragonfly as it swoops and turns, collecting its meals. The dragonfly does not have an internal gyroscope (that we know of) that will maintain a constant orientation and provide a reference regardless of how the dragonfly turns. Nor does it have a magnetic compass that will always point north. In my simplified simulation of dragonfly hunting, the dragonfly turns to align the prey’s image with a specific location on its eye, but it needs to calculate what that location should be.

The third and final layer of my simulated neural network is the motor-command layer. The outputs of the neurons in this layer are high-level instructions for the dragonfly’s muscles, telling the dragonfly in which direction to turn. The dragonfly also uses the output of this layer to predict the effect of its own maneuvers on the location of the prey’s image in its field of view, and it updates that projected location accordingly. This updating allows the dragonfly to hold the line of sight to its prey steady, relative to the external world, as it approaches.
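The prediction step can be illustrated in one dimension. Assume the dragonfly knows the turn it just commanded. It can then shift the place on its eye where the prey ought to appear by the opposite amount. That keeps the world-frame line of sight fixed even though the dragonfly never measures its heading directly. The reference angle and the sequence of turns below are hypothetical, and the update is only a sketch of the idea, not the model’s actual third layer.

```python
def update_desired_location(desired_eye_deg: float, turn_deg: float) -> float:
    """Shift the desired image location to compensate for the dragonfly's own turn.

    A turn of +turn_deg (to the right) makes every fixed world direction
    appear turn_deg further to the left on the eye, so the desired
    location moves by -turn_deg.
    """
    return desired_eye_deg - turn_deg


heading = 0.0          # body heading in a world frame the dragonfly cannot see directly
reference_los = 30.0   # hypothetical world-frame line of sight to hold constant
desired = reference_los - heading   # initial desired location on the eye

for turn in [10.0, -5.0, 20.0]:   # hypothetical sequence of motor commands
    heading += turn
    desired = update_desired_location(desired, turn)
    # If the prey's image sits at `desired`, its world-frame bearing is heading + desired.
    print(f"heading={heading:5.1f}  desired eye location={desired:5.1f}  world LOS={heading + desired:5.1f}")
```

Because each update uses only the commanded turn, the world line of sight stays at the reference value after every maneuver.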

It is possible that biological dragonflies have evolved additional tools to help with the calculations needed for this prediction. For example, dragonflies have specialized sensors that measure body rotations during flight as well as head rotations relative to the body. If these sensors are fast enough, the dragonfly could calculate the effect of its movements on the prey’s image directly from the sensor outputs, or use one method to cross-check the other. I did not consider this possibility in my simulation.

To test this three-layer neural network, I simulated a dragonfly and its prey moving at the same speed through three-dimensional space. As they move, my modeled neural-network brain “sees” the prey, calculates where to point to keep the image of the prey at a constant angle, and sends the appropriate instructions to the muscles. I was able to show that this simple model of a dragonfly’s brain can indeed successfully intercept other insects, even prey traveling along curved or semi-random trajectories. The simulated dragonfly does not quite achieve the success rate of the biological dragonfly, but it also lacks some of the advantages for which dragonflies are known (for example, remarkable flying speed).
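The geometric rule that the network’s weights were set to reproduce can also be simulated directly, without any neurons. The Python sketch below is a two-dimensional simplification of the article’s three-dimensional simulation. At every time step the pursuer picks the velocity whose component across the line of sight matches the prey’s, so the line of sight does not rotate. The starting positions, speeds, turn rate, time step and capture radius are all assumptions. With equal speeds this rule closes the gap only when the prey is not flying directly away along the line of sight, so the curved-path run gives the pursuer a speed advantage.

```python
import math


def parallel_navigation_velocity(rel, prey_vel, speed):
    """Pick a pursuer velocity of the given speed whose component across the
    line of sight matches the prey's, so the line of sight does not rotate."""
    dist = math.hypot(rel[0], rel[1])
    ux, uy = rel[0] / dist, rel[1] / dist                          # unit line-of-sight vector
    along = prey_vel[0] * ux + prey_vel[1] * uy                    # prey speed along the LOS
    px, py = prey_vel[0] - along * ux, prey_vel[1] - along * uy    # prey velocity across the LOS
    closing = math.sqrt(max(speed ** 2 - (px ** 2 + py ** 2), 0.0))
    return px + closing * ux, py + closing * uy


def simulate(prey_speed, pursuer_speed, turn_rate, dt=0.01, t_max=100.0, capture_radius=0.1):
    """Return (capture time or None, largest line-of-sight drift in degrees)."""
    pursuer = [0.0, 0.0]
    prey = [10.0, 10.0]
    heading = math.pi   # prey starts flying west; turn_rate (rad per time unit) bends its path
    los0 = math.atan2(prey[1] - pursuer[1], prey[0] - pursuer[0])
    max_drift = 0.0
    t = 0.0
    while t < t_max:
        rel = (prey[0] - pursuer[0], prey[1] - pursuer[1])
        if math.hypot(*rel) < capture_radius:
            return t, max_drift
        drift = abs(math.degrees(math.atan2(rel[1], rel[0]) - los0))
        max_drift = max(max_drift, drift)
        prey_vel = (prey_speed * math.cos(heading), prey_speed * math.sin(heading))
        vx, vy = parallel_navigation_velocity(rel, prey_vel, pursuer_speed)
        pursuer[0] += vx * dt
        pursuer[1] += vy * dt
        prey[0] += prey_vel[0] * dt
        prey[1] += prey_vel[1] * dt
        heading += turn_rate * dt
        t += dt
    return None, max_drift


t_straight, drift_straight = simulate(prey_speed=1.0, pursuer_speed=1.0, turn_rate=0.0)
t_curved, drift_curved = simulate(prey_speed=1.0, pursuer_speed=1.5, turn_rate=0.3)
print(t_straight, t_curved, drift_straight, drift_curved)
```

In this discrete version the pursuer’s velocity is chosen so that the relative displacement over each step lies along the current line of sight. That is why the measured drift stays at floating-point noise even while the prey curves.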

More work is needed to determine whether this neural network really incorporates all the strategies of the dragonfly’s brain. Researchers at the Howard Hughes Medical Institute’s Janelia Research Campus, in Virginia, have developed tiny backpacks for dragonflies that can measure electrical signals from a dragonfly’s nervous system while it is in flight and transmit these data for analysis. The backpacks are small enough not to distract the dragonfly from the hunt. Neuroscientists can also record signals from individual neurons in the dragonfly’s brain while the insect is held motionless. Presenting it with the appropriate visual cues makes it think it is moving, creating a dragonfly-scale virtual reality.

Data from these systems allow neuroscientists to validate dragonfly-brain models by comparing their activity with the activity patterns of biological neurons in an active dragonfly. We cannot yet directly measure individual connections between neurons in the dragonfly brain. Even so, my collaborators and I will be able to infer whether the dragonfly’s nervous system is making calculations similar to those predicted by my artificial neural network. That will help determine whether connections in the dragonfly brain resemble the precalculated weights in my neural network. We will inevitably find ways in which our model differs from the actual dragonfly brain. Perhaps these differences will provide clues to the shortcuts that the dragonfly brain takes to speed up its calculations.

This backpack, which captures signals from electrodes inserted in a dragonfly’s brain, was created by Anthony Leonardo, a group leader at Janelia Research Campus. Anthony Leonardo/Janelia Research Campus/HHMI

Dragonflies could also teach us how to implement “attention” on a computer. You probably know what it feels like when your brain is at full attention: completely in the zone, focused on one task to the point that other distractions seem to fade away. A dragonfly can likewise focus its attention. Its nervous system turns up the volume on responses to particular, presumably selected, targets, even when other potential prey are visible in the same field of view. It makes sense that once a dragonfly has decided to pursue a particular prey, it should change targets only if it has failed to capture its first choice. (In other words, using parallel navigation to catch a meal is not useful if you are easily distracted.)
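Here is a toy version of this kind of selective attention in Python. It amplifies the response to one chosen target and switches only when that target is lost. The gain value, the dictionary of target responses and the rule that “failure” means the target has dropped out of view are all simplifying assumptions. None of this describes the dragonfly’s actual circuitry.

```python
from typing import Dict, Optional, Tuple

GAIN = 3.0  # hypothetical amplification of the selected target's response


def attend(responses: Dict[str, float], current: Optional[str]) -> Tuple[Optional[str], Dict[str, float]]:
    """Pick one target and turn up its response.

    The current target is kept for as long as it is still being tracked;
    a new one (the strongest response) is chosen only if there is no current
    target or it has been lost, which stands in here for a failed capture.
    """
    if current not in responses:
        current = max(responses, key=responses.get) if responses else None
    gated = {name: r * GAIN if name == current else r for name, r in responses.items()}
    return current, gated


target, _ = attend({"mosquito": 0.6, "gnat": 0.4}, None)     # picks the strongest: mosquito
target, _ = attend({"mosquito": 0.3, "gnat": 0.9}, target)   # stays on mosquito despite the gnat
target, _ = attend({"gnat": 0.9}, target)                    # mosquito lost, so switch
print(target)
```

The stickiness is the point of the design. A stronger distractor does not pull the tracker away while the chosen target is still in view.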

We may end up discovering that the dragonfly’s mechanisms for directing attention are less sophisticated than those people use to focus in the middle of a crowded coffee shop. Even so, a simpler but lower-power mechanism may prove advantageous for next-generation algorithms and computer systems by offering efficient ways to discard irrelevant inputs.

The advantages of studying the dragonfly brain do not end with new algorithms; they also can affect systems design. Dragonfly eyes are fast, operating at the equivalent of 200 frames per second: That is several times the speed of human vision. But their spatial resolution is relatively poor, perhaps just a hundredth of that of the human eye. Understanding how the dragonfly hunts so effectively, despite its limited sensing abilities, can suggest ways of designing more efficient systems. Applied to the missile-defense problem, the dragonfly example suggests that antimissile systems with fast optical sensing could require less spatial resolution to hit a target.

The dragonfly isn’t the only insect that could inform neural-inspired computer design today. Monarch butterflies migrate extremely long distances, using some innate instinct to begin their journeys at the appropriate time of year and to head in the right direction. We know that monarchs rely on the position of the sun, but navigating by the sun requires keeping track of the time of day. If you are a butterfly heading south, you would want the sun on your left in the morning but on your right in the afternoon. So, to set its course, the butterfly brain must read its own circadian rhythm and combine that information with what it is observing.
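The time-compensated sun compass can be sketched in a few lines of Python. The model of the sun’s path is a deliberate oversimplification that ignores latitude and season: due east at 06:00, due south at noon and due west at 18:00, moving 15 degrees per hour. It is enough to reproduce the rule for a butterfly flying south, with the sun on the left in the morning and on the right in the afternoon.

```python
def sun_azimuth(hour: float) -> float:
    """Very rough sun azimuth in degrees clockwise from north.

    Assumes the sun moves uniformly from due east at 06:00 through due south
    at noon to due west at 18:00 (15 degrees per hour), ignoring latitude,
    season and the equation of time.
    """
    return 90.0 + 15.0 * (hour - 6.0)


def sun_angle_relative_to_heading(heading_deg: float, hour: float) -> float:
    """Where the sun should appear, relative to the body axis, to hold a heading.

    Negative values mean the sun is on the left, positive on the right.
    """
    return (sun_azimuth(hour) - heading_deg + 180.0) % 360.0 - 180.0


for hour in (9, 12, 15):
    print(hour, sun_angle_relative_to_heading(180.0, hour))   # heading due south
```

The same heading calls for a different sun angle at every hour, which is why an internal clock has to feed into the calculation.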

Other insects, like the Sahara desert ant, must forage over relatively long distances. Once it finds a source of sustenance, this ant does not simply retrace its steps back to the nest, which would likely mean following a circuitous path. Instead, it calculates a direct route back. Because the location of an ant’s food source changes from day to day, the ant must be able to remember the path it took on its foraging journey. It combines visual information with some internal measure of distance traveled, and then calculates its return route from those memories.
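The bookkeeping the ant needs, often called path integration, can be sketched as a running vector sum. This Python version assumes each leg of the outbound trip has already been measured as a compass heading and a distance. How the ant obtains those numbers (the visual and distance cues the text mentions) is left open, and the function name is hypothetical.

```python
import math
from typing import Iterable, Tuple


def home_vector(legs: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Path integration: add up each outbound leg, given as
    (heading in degrees clockwise from north, distance), and return the
    (heading in degrees, distance) of the direct route back to the nest."""
    x = y = 0.0   # x = east, y = north, relative to the nest
    for heading_deg, distance in legs:
        rad = math.radians(heading_deg)
        x += distance * math.sin(rad)
        y += distance * math.cos(rad)
    home_heading = math.degrees(math.atan2(-x, -y)) % 360.0
    return home_heading, math.hypot(x, y)


# A hypothetical outbound trip: 3 units north, then 4 units east.
heading, distance = home_vector([(0.0, 3.0), (90.0, 4.0)])
print(round(heading, 2), round(distance, 2))
```

Only the running total is stored, not the whole path. However winding the outbound trip, the direct route home falls out of the final sum.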

While nobody knows which neural circuits in the desert ant perform this task, researchers at the Janelia Research Campus have identified neural circuits that allow the fruit fly to orient itself using visual landmarks. The desert ant and monarch butterfly probably use similar mechanisms. Such neural circuits might one day prove useful in, say, low-power drones.

And what if insect-inspired computation were efficient enough that millions of instances of these specialized components could run in parallel to support more powerful data processing or machine learning? Could the next AlphaZero incorporate millions of antlike foraging architectures to refine its game playing? Perhaps insects will inspire a new generation of computers that look very different from what we have today. A small army of dragonfly-interception-like algorithms could be used to control the moving pieces of an amusement park ride. They would ensure that individual cars do not collide (much like pilots steering their boats), even in the midst of a complicated but thrilling dance.

No one knows what the next generation of computers will look like, whether they will be part-cyborg companions or centralized resources much like Isaac Asimov’s Multivac. Likewise, no one can tell what the best path to developing these platforms will entail. While researchers developed early neural networks drawing inspiration from the human brain, today’s artificial neural networks often rely on decidedly unbrainlike calculations. Studying the calculations of individual neurons in biological neural circuits (currently possible directly only in nonhuman systems) may have more to teach us. Insects, seemingly simple but often astonishing in what they can do, have much to contribute to the development of next-generation computers, especially as neuroscience research continues to drive toward a deeper understanding of how biological neural circuits work.

So next time you see an insect doing something clever, imagine the impact on your everyday life if you could have the brilliant efficiency of a small army of tiny dragonfly, butterfly, or ant brains at your disposal. Maybe computers of the future will give new meaning to the term “hive mind,” with swarms of highly specialized but extremely efficient minuscule processors, able to be reconfigured and deployed depending on the task at hand. With the advances being made in neuroscience today, this seeming fantasy may be closer to reality than you think.

This article appears in the August 2021 print issue as “Lessons From a Dragonfly’s Brain.”