Lessons From a Dragonfly’s Brain

Looking to the specialized nervous systems of insects as a model for artificial intelligence may prove just as valuable as studying the human brain, if not more so. Consider the brains of those ants in your pantry. Each has some 250,000 neurons. Larger insects have closer to 1 million. In my research at Sandia National Laboratories in Albuquerque, I study the brain of one of these larger insects, the dragonfly. My colleagues and I at Sandia, a national-security laboratory, hope to take advantage of these insects’ specializations to design computing systems optimized for tasks like intercepting an incoming missile or following an odor plume. By harnessing the speed, simplicity, and efficiency of the dragonfly nervous system, we aim to design computers that perform these functions faster and at a fraction of the power that conventional systems consume.

Looking to a dragonfly as a harbinger of future computer systems may seem counterintuitive. The developments in artificial intelligence and machine learning that make news are typically algorithms that mimic human intelligence or even surpass people’s abilities. Neural networks can already perform as well as people, if not better, at some specific tasks, such as detecting cancer in medical scans. And the potential of these neural networks stretches far beyond visual processing. The computer program AlphaZero, trained by self-play, is the best Go player in the world. Its sibling AI, AlphaStar, ranks among the best StarCraft II players.

These feats, however, come at a cost. Developing these sophisticated systems requires massive amounts of processing power, generally available only to select institutions with the fastest supercomputers and the resources to support them. And the energy cost is off-putting.

Recent estimates suggest that the carbon emissions resulting from developing and training a natural-language processing algorithm are greater than those produced by four cars over their lifetimes.

It takes the dragonfly only about 50 milliseconds to begin to respond to a prey’s maneuver. If we assume 10 ms for cells in the eye to detect and transmit information about the prey, and another 5 ms for muscles to start producing force, this leaves only 35 ms for the neural circuitry to make its calculations. Given that it typically takes a single neuron at least 10 ms to integrate its inputs, the underlying neural network can be at most about three layers deep.
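The latency budget in the paragraph above is simple enough to write out. This back-of-the-envelope sketch uses only the figures quoted there; the function and parameter names are ours. Because 10 ms is a lower bound on a neuron’s integration time, the result is an upper bound on how many layers can work in series.

```python
import math


def max_serial_layers(response_ms=50.0, eye_ms=10.0, muscle_ms=5.0, neuron_ms=10.0):
    """Upper bound on how many neuron layers can integrate their inputs one
    after another within the dragonfly's response latency."""
    budget_ms = response_ms - eye_ms - muscle_ms  # time left for neural circuitry
    # Each layer needs at least neuron_ms, so only whole layers fit in the budget.
    return math.floor(budget_ms / neuron_ms)


print(max_serial_layers())  # 3, since 35 ms / 10 ms = 3.5
```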

But does an artificial neural network really need to be large and complex to be useful? I believe it doesn’t. To reap the benefits of neural-inspired computers in the near term, we must strike a balance between simplicity and sophistication.

Which brings me back to the dragonfly, an animal with a brain that may provide precisely the right balance for certain applications.

If you have ever encountered a dragonfly, you already know how fast these beautiful creatures can zoom, and you have seen their incredible agility in the air. Maybe less obvious from casual observation is their excellent hunting ability: Dragonflies successfully capture up to 95 percent of the prey they pursue, eating hundreds of mosquitoes in a day.

The physical prowess of the dragonfly has certainly not gone unnoticed. For a long time, U.S. agencies have experimented with using dragonfly-inspired designs for surveillance drones. Now it is time to turn our attention to the brain that controls this tiny hunting machine.

While dragonflies may not be able to play strategic games like Go, a dragonfly does demonstrate a form of strategy in the way it aims ahead of its prey’s current location to intercept its dinner. This takes calculations performed extremely fast: It typically takes a dragonfly just 50 milliseconds to start turning in response to a prey’s maneuver. It does this while tracking the angle between its head and its body, so that it knows which wings to flap faster to turn ahead of the prey. And it also tracks its own movements, because as the dragonfly turns, the prey will also appear to move.

The model dragonfly reorients in response to the prey’s turning. The smaller black circle is the dragonfly’s head, held at its initial position. The solid black line indicates the direction of the dragonfly’s flight; the dotted blue lines are the plane of the model dragonfly’s eye. The pink star is the prey’s position relative to the dragonfly, with the dotted pink line indicating the dragonfly’s line of sight.

So the dragonfly’s brain is performing a remarkable feat, given that the time needed for a single neuron to add up all its inputs (called its membrane time constant) exceeds 10 milliseconds. If you factor in time for the eye to process visual information and for the muscles to produce the force needed to move, there’s really only time for three, maybe four, layers of neurons, in sequence, to add up their inputs and pass on information.

Could I build a neural network that works like the dragonfly interception system? I also wondered about uses for such a neural-inspired interception system. Being at Sandia, I immediately considered defense applications, such as missile defense, imagining missiles of the future with onboard systems designed to rapidly calculate interception trajectories without affecting a missile’s weight or power consumption. But there are civilian applications as well.

For example, the algorithms that control self-driving cars might be made more efficient, no longer requiring a trunkful of computing equipment. If a dragonfly-inspired system can perform the calculations to plot an interception trajectory, perhaps autonomous drones could use it to avoid collisions. And if a computer could be made the same size as a dragonfly brain (about 6 cubic millimeters), perhaps insect repellent and mosquito netting will one day become a thing of the past, replaced by tiny insect-zapping drones!

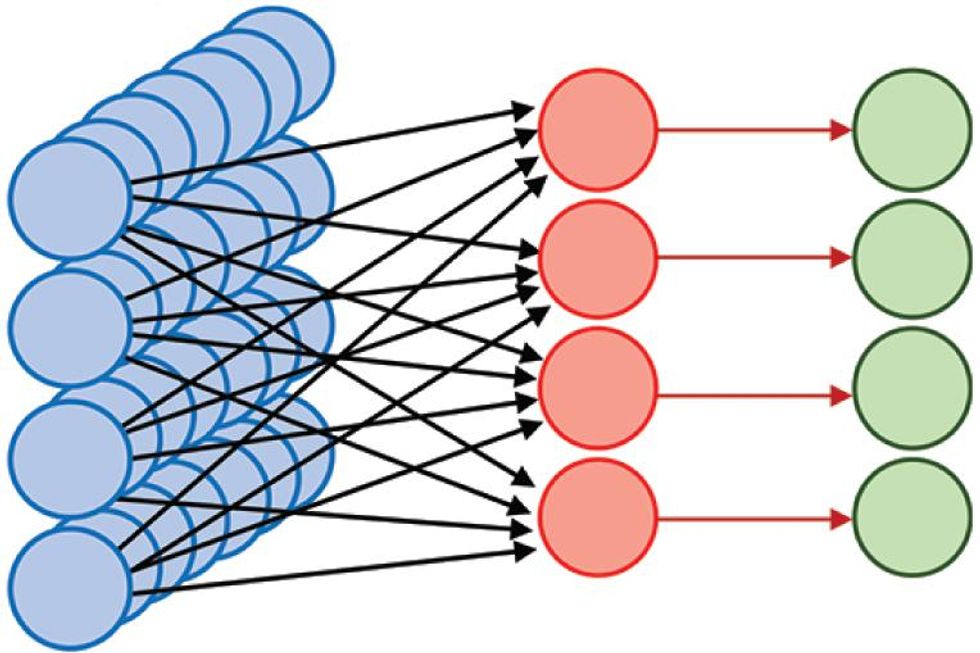

To begin to answer these questions, I created a simple neural network to stand in for the dragonfly’s nervous system and used it to calculate the turns that a dragonfly makes to capture prey. My three-layer neural network exists as a software simulation. Initially, I worked in Matlab simply because that was the coding environment I was already using. I have since ported the model to Python.

Because dragonflies have to see their prey to capture it, I started by simulating a simplified version of the dragonfly’s eyes, capturing the minimum detail required for tracking prey. Although dragonflies have two eyes, it’s generally accepted that they do not use stereoscopic depth perception to estimate distance to their prey. My model does not represent both eyes. Nor did I try to match the resolution of a dragonfly eye. Instead, the first layer of the neural network includes 441 neurons that represent input from the eyes, each describing a specific region of the visual field; these regions are tiled to form a 21-by-21-neuron array that covers the dragonfly’s field of view. As the dragonfly turns, the location of the prey’s image in the dragonfly’s field of view changes. The dragonfly calculates the turns required to align the prey’s image with one (or a few, if the prey is large enough) of these “eye” neurons. A second set of 441 neurons, also in the first layer of the network, tells the dragonfly which eye neurons should be aligned with the prey’s image, that is, where the prey should be within its field of view.
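A minimal sketch of how a point-like prey image could be encoded on the 21-by-21 input grid, in Python. This is not the author’s code. The 63° field of view is a hypothetical value chosen so that each patch spans exactly 3°. The sketch handles only a point target, not prey large enough to cover several neurons. The same encoder can stand in for the second set of 441 “target” neurons.

```python
import numpy as np

GRID = 21                   # 21-by-21 tiling from the text -> 441 "eye" neurons
FOV_DEG = 63.0              # hypothetical field-of-view width (not given in the article)
CELL_DEG = FOV_DEG / GRID   # each neuron covers a 3-degree patch in this sketch


def encode_direction(azimuth_deg, elevation_deg):
    """One-hot 441-vector marking the neuron whose patch contains the given
    direction, measured from the center of the field of view. Returns all
    zeros if the direction lies outside the field of view."""
    v = np.zeros(GRID * GRID)
    col = int(np.floor((azimuth_deg + FOV_DEG / 2) / CELL_DEG))
    row = int(np.floor((elevation_deg + FOV_DEG / 2) / CELL_DEG))
    if 0 <= row < GRID and 0 <= col < GRID:
        v[row * GRID + col] = 1.0
    return v


eye = encode_direction(0.0, 0.0)     # prey image at the center of view
target = encode_direction(9.0, 0.0)  # where the prey image *should* be
print(int(np.argmax(eye)), int(np.argmax(target)))  # 220 223
```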

The model dragonfly engages its prey.

Processing (the calculations that take input describing the movement of an object across the field of vision and turn it into instructions about which direction the dragonfly needs to turn) happens between the first and third layers of my artificial neural network. In this second layer, I used an array of 194,481 (21⁴) neurons, likely much larger than the number of neurons a dragonfly uses for this task. I precalculated the weights of the connections between all the neurons in the network. While these weights could be learned given enough time, there is an advantage to “learning” through evolution and preprogrammed neural network architectures. Once it comes out of its nymph stage as a winged adult (technically referred to as a teneral), the dragonfly does not have a parent to feed it or show it how to hunt. The dragonfly is in a vulnerable state and getting used to a new body; it would be disadvantageous to have to figure out a hunting strategy at the same time. I set the weights of the network to allow the model dragonfly to calculate the correct turns to intercept its prey from incoming visual information.

What turns are those? Well, if a dragonfly wants to catch a mosquito that’s crossing its path, it can’t just aim at the mosquito. To borrow from what hockey player Wayne Gretzky once said about pucks, the dragonfly has to aim for where the mosquito is going to be. You might think that following Gretzky’s advice would require a complex algorithm, but in fact the strategy is quite simple: All the dragonfly needs to do is maintain a constant angle between its line of sight to its lunch and a fixed reference direction.
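The article does not spell out how the 194,481 units of the second layer are wired. However, the count equals 441 × 441, which suggests one unit per pairing of an “eye” neuron with a “target” neuron. The sketch below assumes exactly that; this is our interpretation, not the author’s published design. A second-layer unit fires only when both of its input neurons are active. Its precalculated weights to the two motor outputs encode the turn that carries the prey image from the first cell to the second. The 3° patch size and the sign convention are hypothetical. Nothing is learned, in keeping with the point about weights set in advance.

```python
import numpy as np

GRID = 21
N_IN = GRID * GRID   # 441 eye neurons and 441 target neurons
CELL_DEG = 3.0       # hypothetical angular width of one eye patch

rows = np.arange(N_IN) // GRID
cols = np.arange(N_IN) % GRID

# Hidden unit (a, b) means "prey image at eye cell a, wanted at cell b".
# Assumed convention: positive yaw/pitch = turn right/up, which moves the
# prey image left/down on the eye, so turn = current cell - target cell.
d_row = rows[:, None] - rows[None, :]   # entry [a, b] = rows[a] - rows[b]
d_col = cols[:, None] - cols[None, :]
# Precalculated (not learned) output weights, shape (2, 194481).
W_OUT = np.stack([d_col.ravel(), d_row.ravel()]).astype(float) * CELL_DEG


def one_hot(row, col):
    v = np.zeros(N_IN)
    v[row * GRID + col] = 1.0
    return v


def motor_command(eye, target):
    # Second layer: 441 x 441 = 21**4 units, each active only when its eye
    # neuron AND its target neuron are both active (an outer product).
    hidden = np.outer(eye, target).ravel()
    yaw_deg, pitch_deg = W_OUT @ hidden
    return yaw_deg, pitch_deg


# Prey image two cells right of where it should be -> turn right.
yaw, pitch = motor_command(one_hot(10, 12), one_hot(10, 10))  # yaw == 6.0, pitch == 0.0
```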

Readers who have any experience piloting boats will recognize why that is. They know to get worried when the angle between the line of sight to another boat and a reference direction (for example, due north) remains constant, because they are on a collision course. Mariners have long avoided steering such a course, known as parallel navigation.
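The mariner’s rule of thumb can be expressed as a small check on successive bearing readings. In this sketch the 1° tolerance is an arbitrary assumption. The check also adds a condition the paragraph leaves implicit but standard seamanship states outright: the bearing must hold steady *while the range shrinks* (“constant bearing, decreasing range”). The helper wraps angles so that readings straddling due north compare correctly.

```python
def angle_diff(a_deg, b_deg):
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0


def on_collision_course(bearings_deg, ranges, tol_deg=1.0):
    """Constant bearing + decreasing range => collision course.

    bearings_deg: successive bearings to the other boat, measured from a fixed
    reference such as due north; ranges: the matching distances."""
    steady = all(abs(angle_diff(b, bearings_deg[0])) <= tol_deg for b in bearings_deg)
    closing = all(later < earlier for earlier, later in zip(ranges, ranges[1:]))
    return steady and closing


print(on_collision_course([359.6, 0.2, 359.9], [800, 650, 500]))  # True
print(on_collision_course([40.0, 47.0, 55.0], [800, 650, 500]))   # False
```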

Translated to dragonflies, which want to collide with their prey, the prescription is simple: Keep the line of sight to your prey constant relative to some external reference. However, this task is not necessarily trivial for a dragonfly as it swoops and turns, collecting its meals. The dragonfly does not have an internal gyroscope (that we know of) that will maintain a constant orientation and provide a reference regardless of how the dragonfly turns. Nor does it have a magnetic compass that will always point north. In my simplified simulation of dragonfly hunting, the dragonfly turns to align the prey’s image with a specific location on its eye, but it needs to calculate what that location should be.
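For a pursuer that *does* have a fixed external reference, parallel navigation reduces to a simple velocity rule. The pursuer cancels the prey’s motion across the line of sight and uses its remaining speed to close along it, so the line of sight translates without rotating. The two-dimensional sketch below is not the author’s three-layer network. It assumes perfect, noise-free knowledge of the prey’s position and velocity in a world frame, which is exactly the reference the paragraph notes a dragonfly lacks. It also gives the pursuer a speed advantage so it can both match the prey’s sideways motion and still close the range.

```python
import math


def parallel_navigation_velocity(pursuer, prey, prey_vel, speed):
    """Velocity that keeps the line of sight (LOS) pointing in a fixed direction:
    match the prey's velocity component perpendicular to the LOS and spend the
    rest of the speed budget closing along it."""
    dx, dy = prey[0] - pursuer[0], prey[1] - pursuer[1]
    r = math.hypot(dx, dy)
    ux, uy = dx / r, dy / r   # unit vector along the LOS
    nx, ny = -uy, ux          # unit vector perpendicular to the LOS
    v_perp = prey_vel[0] * nx + prey_vel[1] * ny
    v_perp = max(-speed, min(speed, v_perp))  # cannot exceed own speed
    v_along = math.sqrt(speed ** 2 - v_perp ** 2)
    return (v_along * ux + v_perp * nx, v_along * uy + v_perp * ny)


def simulate(pursuer, prey, prey_vel, speed, dt=0.01, capture_radius=0.05, max_steps=10_000):
    """Euler-integrate a straight-flying prey and a parallel-navigation pursuer.
    Returns (captured, elapsed time, largest LOS-angle drift in degrees)."""
    los0 = math.atan2(prey[1] - pursuer[1], prey[0] - pursuer[0])
    drift = 0.0
    for step in range(max_steps):
        if math.dist(pursuer, prey) < capture_radius:
            return True, step * dt, drift
        vx, vy = parallel_navigation_velocity(pursuer, prey, prey_vel, speed)
        pursuer = (pursuer[0] + vx * dt, pursuer[1] + vy * dt)
        prey = (prey[0] + prey_vel[0] * dt, prey[1] + prey_vel[1] * dt)
        los = math.atan2(prey[1] - pursuer[1], prey[0] - pursuer[0])
        drift = max(drift, abs(math.degrees(math.remainder(los - los0, 2 * math.pi))))
    return False, max_steps * dt, drift


captured, t, drift = simulate((0.0, 0.0), (10.0, 0.0), (0.0, 1.0), speed=2.0)
```

With a prey crossing at right angles, the pursuer holds the line of sight steady (drift of essentially zero) and catches it in a little under 6 simulated seconds. A prey fleeing straight down the line of sight faster than the pursuer is, as expected, never caught.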

The third and final layer of my simulated neural network is the motor-command layer. The outputs of the neurons in this layer are high-level instructions for the dragonfly’s muscles, telling the dragonfly in which direction to turn. The dragonfly also uses the output of this layer to predict the effect of its own maneuvers on the location of the prey’s image in its field of view, and it updates that projected location accordingly. This updating allows the dragonfly to hold the line of sight to its prey steady, relative to the external world, as it approaches.
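The prediction step can be illustrated in its simplest, yaw-only form. Every turn command is also fed back (what neuroscientists call an efference copy) to shift the desired position of the prey image on the eye by the opposite amount. Heading plus on-eye position, which is the prey’s bearing in the world, therefore stays fixed. The class name and the one-dimensional treatment are our simplifications; the article does not describe how the model computes this update.

```python
class LineOfSightKeeper:
    """Yaw-only sketch of predicting the visual effect of one's own turns."""

    def __init__(self, heading_deg, prey_world_bearing_deg):
        self.heading_deg = heading_deg
        # Desired position of the prey image on the eye, relative to the body axis.
        self.target_on_eye_deg = prey_world_bearing_deg - heading_deg

    def turn(self, delta_deg):
        self.heading_deg += delta_deg  # the command sent to the muscles...
        # ...and a copy of it predicts the visual consequence: turning by +delta
        # shifts the image of any fixed world direction by -delta on the eye.
        self.target_on_eye_deg -= delta_deg

    def prey_world_bearing_deg(self):
        return self.heading_deg + self.target_on_eye_deg


keeper = LineOfSightKeeper(heading_deg=0.0, prey_world_bearing_deg=30.0)
for delta in (10.0, -4.0, 7.5):
    keeper.turn(delta)
print(keeper.target_on_eye_deg)         # 16.5
print(keeper.prey_world_bearing_deg())  # 30.0, unchanged by the turns
```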

It is possible that biological dragonflies have evolved additional tools to help with the calculations needed for this prediction. For example, dragonflies have specialized sensors that measure body rotations during flight as well as head rotations relative to the body. If these sensors are fast enough, the dragonfly could calculate the effect of its movements on the prey’s image directly from the sensor outputs, or use one method to cross-check the other. I did not consider this possibility in my simulation.

To test this three-layer neural network, I simulated a dragonfly and its prey, moving at the same speed through three-dimensional space. As they do so, my modeled neural-network brain “sees” the prey, calculates where to point to keep the image of the prey at a constant angle, and sends the appropriate instructions to the muscles. I was able to show that this simple model of a dragonfly’s brain can indeed successfully intercept other insects, even prey traveling along curved or semi-random trajectories. The simulated dragonfly does not quite achieve the success rate of the biological dragonfly, but it also does not have all the advantages (for example, impressive flying speed) for which dragonflies are known.

More work is needed to determine whether this neural network really incorporates all the secrets of the dragonfly’s brain. Researchers at the Howard Hughes Medical Institute’s Janelia Research Campus, in Virginia, have developed tiny backpacks for dragonflies that can measure electrical signals from a dragonfly’s nervous system while it is in flight and transmit these data for analysis. The backpacks are small enough not to distract the dragonfly from the hunt. Similarly, neuroscientists can record signals from individual neurons in the dragonfly’s brain while the insect is held motionless but made to think it’s moving by presenting it with the appropriate visual cues, creating a dragonfly-scale virtual reality.

Data from these systems allows neuroscientists to validate dragonfly-brain models by comparing their activity with activity patterns of biological neurons in an active dragonfly. While we cannot yet directly measure individual connections between neurons in the dragonfly brain, my collaborators and I will be able to infer whether the dragonfly’s nervous system is making calculations similar to those predicted by my artificial neural network. That will help determine whether connections in the dragonfly brain resemble the precalculated weights in my neural network. We will inevitably find ways in which our model differs from the actual dragonfly brain. Perhaps these differences will provide clues to the shortcuts that the dragonfly brain takes to speed up its calculations.

This backpack, which captures signals from electrodes inserted in a dragonfly’s brain, was created by Anthony Leonardo, a group leader at Janelia Research Campus. (Photo: Anthony Leonardo/Janelia Research Campus/HHMI)

Dragonflies could also teach us how to implement “attention” on a computer. You likely know what it feels like when your brain is at full attention, completely in the zone, focused on one task to the point that other distractions seem to fade away. A dragonfly can likewise focus its attention. Its nervous system turns up the volume on responses to particular, presumably selected, targets, even when other potential prey are visible in the same field of view. It makes sense that once a dragonfly has decided to pursue a particular prey, it should change targets only if it has failed to capture its first choice. (In other words, using parallel navigation to catch a meal is not useful if you are easily distracted.)
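A toy version of this selective attention locks on to the strongest response, amplifies the locked target, and switches only when that target disappears from view. The gain of 3 and the complete suppression of unselected targets are hypothetical simplifications, not measurements of the dragonfly’s nervous system.

```python
def select_target(responses, locked=None, gain=3.0):
    """Toy attention: keep the current target while it stays visible, otherwise
    lock on to the strongest response.

    responses: dict mapping each visible target to its raw neural response.
    Returns (locked target or None, gated responses), where the locked target's
    response is amplified by `gain` and every other response is suppressed."""
    if locked not in responses:  # no target yet, or the old one was lost
        locked = max(responses, key=responses.get) if responses else None
    gated = {k: (v * gain if k == locked else 0.0) for k, v in responses.items()}
    return locked, gated


target, _ = select_target({"fly": 0.4, "gnat": 0.9})                  # "gnat"
target, _ = select_target({"fly": 0.95, "gnat": 0.5}, locked=target)  # still "gnat"
target, _ = select_target({"fly": 0.95}, locked=target)               # gnat gone: "fly"
```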

Even if we end up discovering that the dragonfly mechanisms for directing attention are less sophisticated than those people use to focus in the middle of a crowded coffee shop, it’s possible that a simpler but lower-power mechanism will prove advantageous for next-generation algorithms and computer systems by offering efficient ways to discard irrelevant inputs.

The advantages of studying the dragonfly brain do not end with new algorithms; they also can affect systems design. Dragonfly eyes are fast, operating at the equivalent of 200 frames per second: That’s several times the speed of human vision. But their spatial resolution is relatively poor, perhaps just a hundredth of that of the human eye. Understanding how the dragonfly hunts so effectively, despite its limited sensing abilities, can suggest ways of designing more efficient systems. To take the missile-defense problem as an example, the dragonfly suggests that antimissile systems with fast optical sensing could require less spatial resolution to hit a target.

The dragonfly isn’t the only insect that could inform neural-inspired computer design today. Monarch butterflies migrate extremely long distances, using some innate instinct to begin their journeys at the appropriate time of year and to head in the right direction. We know that monarchs rely on the position of the sun, but navigating by the sun requires keeping track of the time of day. If you are a butterfly heading south, you would want the sun on your left in the morning but on your right in the afternoon. So, to set its course, the butterfly brain must read its own circadian rhythm and combine that information with what it is observing.
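Time-compensated sun navigation can be sketched with a deliberately crude sun model. We assume the sun rises due east at 06:00 and sweeps west at a constant 15° per hour. This assumption is ours and ignores latitude and season. Angles are compass bearings (north = 0°, east = 90°), and negative results mean “on the left.”

```python
def sun_azimuth_deg(hour):
    """Crude, hypothetical sun model: due east (90 deg) at 06:00, moving at a
    constant 15 deg per hour to due west (270 deg) at 18:00. Real solar azimuth
    depends on latitude and season and does not move uniformly."""
    return 90.0 + 15.0 * (hour - 6.0)


def sun_relative_to_heading(heading_deg, hour):
    """Where the sun should appear relative to the body axis for a given compass
    heading at a given time: negative = on the left, positive = on the right."""
    return (sun_azimuth_deg(hour) - heading_deg + 180.0) % 360.0 - 180.0


SOUTH = 180.0
print(sun_relative_to_heading(SOUTH, 9.0))   # -45.0: sun on the left in the morning
print(sun_relative_to_heading(SOUTH, 15.0))  # 45.0: sun on the right in the afternoon
```

The same sun position means different headings at different hours. That is why the clock input is indispensable: without it, a butterfly holding the sun at a fixed angle would drift through a 180° arc over the day.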

Other insects, like the Sahara desert ant, must forage over relatively long distances. Once it finds a source of sustenance, this ant does not simply retrace its steps back to the nest along what is likely a circuitous path. Instead it calculates a direct route back. Because the location of an ant’s food source changes from day to day, it must be able to remember the path it took on its foraging journey, combining visual information with some internal measure of distance traveled, and then calculate its return route from those memories.
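The desert ant’s trick, usually called path integration, amounts to summing displacement vectors along the way and reversing the total. This sketch assumes the ant already has a compass heading and a distance for each leg of its trip. How it measures them (visual cues, some internal measure of distance) is abstracted away.

```python
import math


def home_vector(legs):
    """Path integration sketch. legs: (compass heading in degrees, distance) for
    each leg of the outbound trip, with north = 0 deg and east = 90 deg.
    Returns (compass heading back to the nest, straight-line distance)."""
    east = north = 0.0
    for heading_deg, dist in legs:
        h = math.radians(heading_deg)
        east += dist * math.sin(h)
        north += dist * math.cos(h)
    # The way home is the negative of the summed displacement.
    back = math.degrees(math.atan2(-east, -north)) % 360.0
    return back, math.hypot(east, north)


back, dist = home_vector([(0, 3), (90, 6), (180, 3)])  # 3 north, 6 east, 3 south
```

For this U-shaped outbound trip of 12 units, the home vector points due west (about 270°) and is only 6 units long.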

While nobody knows which neural circuits in the desert ant perform this task, researchers at the Janelia Research Campus have identified neural circuits that allow the fruit fly to orient itself using visual landmarks. The desert ant and monarch butterfly likely use similar mechanisms. Such neural circuits might one day prove useful in, say, low-power drones.

And what if the efficiency of insect-inspired computation is such that hundreds of thousands of instances of these specialized components can be run in parallel to support more powerful information processing or machine learning? Could the next AlphaZero incorporate hundreds of thousands of antlike foraging architectures to refine its game playing? Perhaps insects will inspire a new generation of computers that look very different from what we have today. A small army of dragonfly-interception-like algorithms could be used to control the moving pieces of an amusement park ride, ensuring that individual cars do not collide (much like pilots steering their boats) even in the midst of a complicated but thrilling dance.

No one knows what the next generation of computers will look like, whether they will be part-cyborg companions or centralized resources much like Isaac Asimov’s Multivac. Likewise, no one can tell what the best path to developing these platforms will entail. While researchers developed early neural networks drawing inspiration from the human brain, today’s artificial neural networks often rely on decidedly unbrainlike calculations. Studying the calculations of individual neurons in biological neural circuits (currently only directly possible in nonhuman systems) may have more to teach us. Insects, seemingly simple but often astonishing in what they can do, have much to contribute to the development of next-generation computers, especially as neuroscience research continues to drive toward a deeper understanding of how biological neural circuits work.

So next time you see an insect doing something clever, imagine the impact on your everyday life if you could have the brilliant efficiency of a small army of tiny dragonfly, butterfly, or ant brains at your disposal. Maybe computers of the future will give new meaning to the term “hive mind,” with swarms of highly specialized but extremely efficient minuscule processors, able to be reconfigured and deployed depending on the task at hand. With the advances being made in neuroscience today, this seeming fantasy may be closer to reality than you think.

This article appears in the August 2021 print issue as “Lessons From a Dragonfly’s Brain.”