Review: DataRobot aces automated machine learning

Data science is nothing if not tedious, in ordinary practice. The initial tedium consists of finding data relevant to the problem you’re trying to model, cleaning it, and finding or constructing a good set of features. The next tedium is a matter of attempting to train every possible machine learning and deep learning model on your data, and picking the best few to tune.

Then you need to understand the models well enough to explain them; this is especially important when the model will be helping to make life-altering decisions, and when decisions may be reviewed by regulators. Finally, you need to deploy the best model (usually the one with the best accuracy and acceptable prediction time), monitor it in production, and improve (retrain) the model as the data drifts over time.

AutoML, i.e. automated machine learning, can speed up these processes dramatically, sometimes from months to hours, and can also lower the human requirements from experienced Ph.D. data scientists to less-skilled data scientists and even business analysts. DataRobot was one of the earliest vendors of AutoML solutions, although they often call it Enterprise AI and typically bundle the software with consulting from a trained data scientist. DataRobot didn’t cover the whole machine learning lifecycle initially, but over the years they have acquired other companies and integrated their products to fill in the gaps.

As shown in the listing below, DataRobot has divided the AutoML process into 10 steps. While DataRobot claims to be the only vendor to cover all 10 steps, other vendors might beg to differ, or offer their own services plus one or more third-party services as a “best of breed” system. Competitors to DataRobot include (in alphabetical order) AWS, Google (plus Trifacta for data preparation), H2O.ai, IBM, MathWorks, Microsoft, and SAS.

The 10 steps of automated machine learning, according to DataRobot:

- Data identification

- Data preparation

- Feature engineering

- Algorithm diversity

- Algorithm selection

- Training and tuning

- Head-to-head model competitions

- Human-friendly insights

- Easy deployment

- Model monitoring and management

DataRobot platform overview

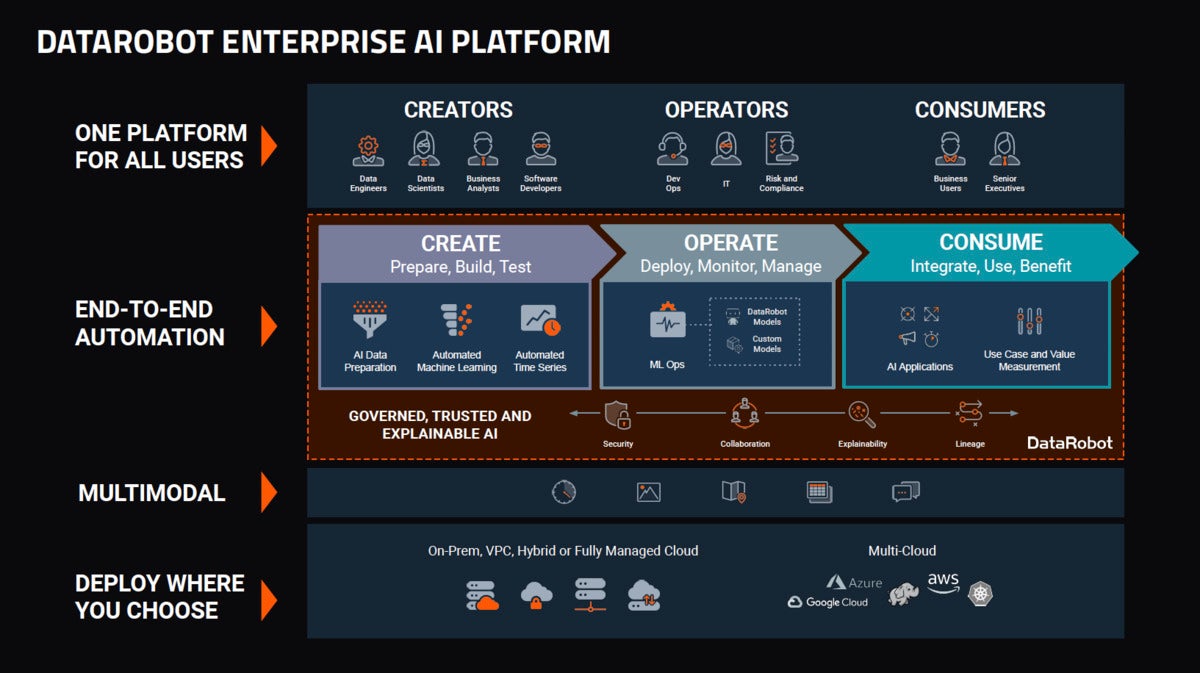

As you can see in the slide below, the DataRobot platform tries to address the needs of a variety of personas, automate the entire machine learning lifecycle, deal with the issues of model explainability and governance, deal with all kinds of data, and deploy pretty much anywhere. It mostly succeeds.

DataRobot helps data engineers with its AI Catalog and Paxata data prep. It helps data scientists primarily with its AutoML and automated time series, but also with its more advanced options for models and its Trusted AI. It helps business analysts with its easy-to-use interface. And it helps software developers with its ability to integrate machine learning models with production systems. DevOps and IT benefit from DataRobot MLOps (acquired in 2019 from ParallelM), and risk and compliance officers can benefit from its Trusted AI. Business users and executives benefit from better and faster model building and from data-driven decision making.

End-to-end automation speeds up the entire machine learning process and also tends to produce better models. By quickly training many models in parallel and using a large library of models, DataRobot can sometimes find a much better model than skilled data scientists training one model at a time.

In the row marked multimodal in the diagram below, there are five icons. At first they confused me, so I asked what they mean. Essentially, DataRobot has models that can handle time series, images, geographic information, tabular data, and text. The surprising bit is that it can combine all of those data types in a single model.

DataRobot offers you a choice of deployment locations. It will run on a Linux server or Linux cluster on-premises, in a cloud VPC, in a hybrid cloud, or in a fully managed cloud. It supports Amazon Web Services, Microsoft Azure, or Google Cloud Platform, as well as Hadoop and Kubernetes.

DataRobot platform diagram. Several of the features were added to the platform through acquisitions, including data preparation and MLOps.

Paxata data prep

DataRobot acquired self-service data preparation company Paxata in December 2019. Paxata is now integrated with DataRobot’s AI Catalog and feels like part of the DataRobot product, although you can still buy it as a standalone product if you wish.

Paxata has three functions. First, it allows you to import datasets. Second, it lets you explore, clean, combine, and condition the data. And third, it allows you to publish prepared data as an AnswerSet. Each step you perform in Paxata creates a version, so that you can always continue to work on the data.

Data cleaning in Paxata includes standardizing values, removing duplicates, finding and fixing errors, and more. You can shape your data using tools such as pivot, transpose, group by, and more.

The screenshot below shows a real estate dataset that has a dozen Paxata processing steps. It starts with a house price tabular dataset; then it adds exterior and interior images, removes unnecessary columns and bad rows, and adds ZIP code geospatial information. This screenshot is from the House Listings demo.

Paxata allows the user to construct AnswerSets from datasets one step at a time. The Paxata tools all have a GUI, although the Compute tool lets the user enter simple formulas or build advanced formulas using columns and functions.

DataRobot automated machine learning

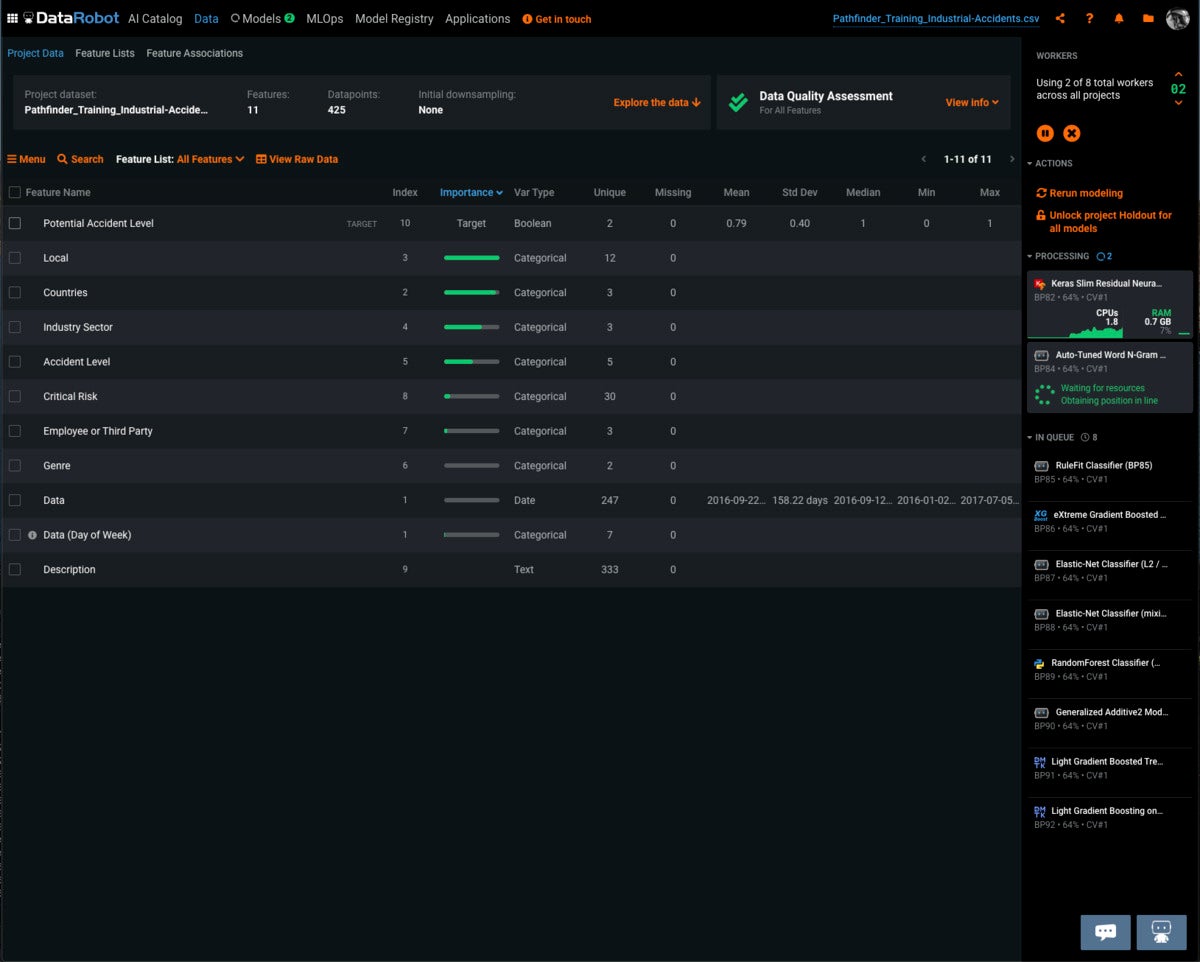

Basically, DataRobot AutoML works by going through a couple of exploratory data analysis (EDA) phases, identifying informative features, engineering new features (especially from date types), then trying a lot of models with small amounts of data.

EDA phase 1 runs on up to 500MB of your dataset and provides summary statistics, as well as checking for outliers, inliers, excess zeroes, and disguised missing values. When you select a target and hit run, DataRobot “searches through millions of possible combinations of algorithms, preprocessing steps, features, transformations, and tuning parameters. It then uses supervised learning algorithms to analyze the data and identify (apparent) predictive relationships.”

DataRobot autopilot mode starts with 16% of the data for all appropriate models, 32% of the data for the top 16 models, and 64% of the data for the top eight models. All results are displayed on the leaderboard. Quick mode runs a subset of models on 32% and 64% of the data. Manual mode gives you full control over which models to execute, including specific models from the repository.
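To make the autopilot schedule concrete, here is a minimal sketch of a staged search that trains every candidate on a small sample and promotes only the top performers to larger samples. It is not DataRobot code: `run_autopilot`, `toy_evaluate`, and the model names are hypothetical, and the scoring function is a stand-in for actually training a model on the given fraction of the data.

```python
from typing import Callable, List, Optional, Tuple

# (sample fraction, how many models survive to the next round); None = last round
STAGES: List[Tuple[float, Optional[int]]] = [(0.16, 16), (0.32, 8), (0.64, None)]


def run_autopilot(
    candidates: List[str],
    evaluate: Callable[[str, float], float],
    stages: List[Tuple[float, Optional[int]]] = STAGES,
) -> List[Tuple[str, float, float]]:
    """Return a leaderboard of (model name, sample fraction, score) entries."""
    leaderboard: List[Tuple[str, float, float]] = []
    survivors = list(candidates)
    for fraction, keep in stages:
        # Score every surviving model on this sample size (higher is better).
        scored = [(name, evaluate(name, fraction)) for name in survivors]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        leaderboard.extend((name, fraction, score) for name, score in scored)
        # Promote only the best `keep` models to the next, larger sample.
        survivors = [name for name, _ in scored]
        if keep is not None:
            survivors = survivors[:keep]
    return leaderboard


def toy_evaluate(name: str, fraction: float) -> float:
    """Hypothetical stand-in for training: a fixed per-model quality plus a data bonus."""
    return int(name[-2:]) / 100 + fraction


if __name__ == "__main__":
    models = [f"model_{i:02d}" for i in range(40)]
    for entry in run_autopilot(models, toy_evaluate)[-8:]:
        print(entry)
```

The point of the design is cost: most candidates are only ever trained on the cheapest sample, and the expensive runs are reserved for the few that have already shown promise.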

DataRobot AutoML in action. The models being trained are at the right, along with the percentage of the data being used for training each model.

DataRobot time-aware modeling

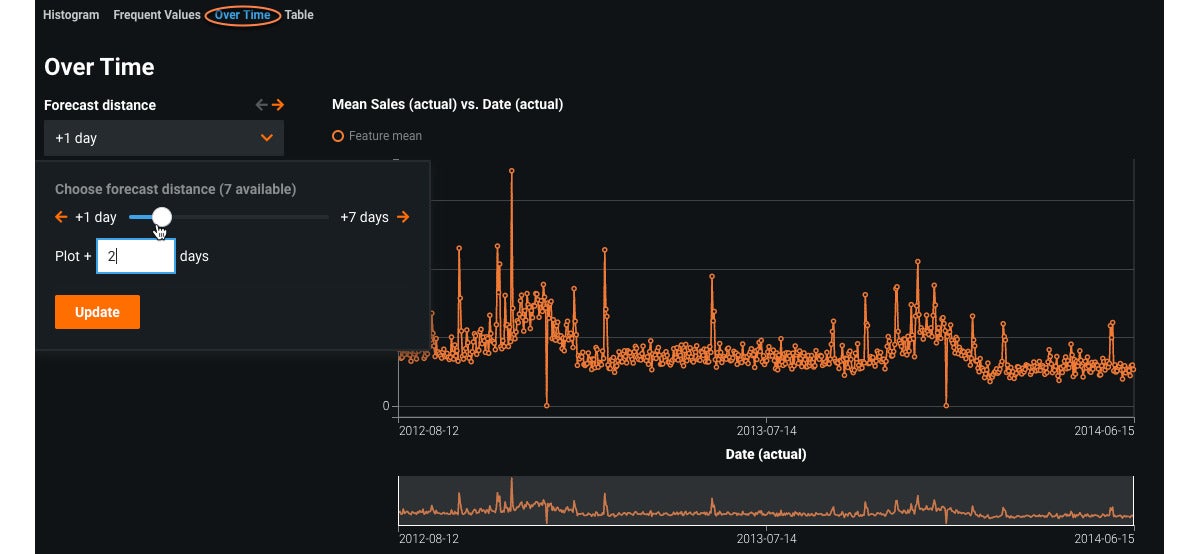

DataRobot can do two kinds of time-aware modeling if you have date/time features in your dataset. You should use out-of-time validation (OTV) when your data is time-relevant but you are not forecasting (instead, you are predicting the target value on each individual row). Use OTV if you have single event data, such as patient intake or loan defaults.
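The core idea behind out-of-time validation can be sketched in a few lines: order the rows by date, train on the earlier ones, and validate on the later ones, so the model is never scored on data older than what it learned from. This is a simplified illustration, not DataRobot's implementation; the function name, the `intake_date` field, and the single 80/20 split are all assumptions.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

Row = Dict[str, object]


def out_of_time_split(
    rows: List[Row], date_key: str, holdout_fraction: float = 0.2
) -> Tuple[List[Row], List[Row]]:
    """Split rows so that every validation row is at least as recent as every training row."""
    ordered = sorted(rows, key=lambda row: row[date_key])
    cut = int(len(ordered) * (1 - holdout_fraction))
    return ordered[:cut], ordered[cut:]


if __name__ == "__main__":
    # Hypothetical single-event records, e.g. one row per patient intake.
    start = date(2020, 1, 1)
    rows = [{"intake_date": start + timedelta(days=7 * i), "readmitted": i % 2} for i in range(10)]
    train, valid = out_of_time_split(rows, "intake_date")
    print(len(train), "training rows,", len(valid), "validation rows")
```

A random split would leak future information into training, which is why time-relevant data gets a chronological split even when no forecasting is involved.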

You can use time series when you want to forecast multiple future values of the target (for example, predicting sales for each day next week). Use time series to extrapolate future values in a continuous sequence.

In general, it has been difficult for machine learning models to outperform traditional statistical models for time series prediction, such as ARIMA. DataRobot’s time series functionality works by encoding time-sensitive components as features that can contribute to ordinary machine learning models. It adds columns to each row for examples of predicting different distances into the future, and columns of lagged features and rolling statistics for predicting that new distance.
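As a rough illustration of that encoding, the sketch below turns a plain series into supervised-learning rows: one row per forecast point and forecast distance, with lagged values and a rolling mean as features. The column names, lag choices, and window size are my own assumptions, not DataRobot's actual derived features.

```python
from statistics import mean
from typing import Dict, List, Sequence


def make_training_rows(
    series: Sequence[float],
    distances: Sequence[int] = (1, 2),
    lags: Sequence[int] = (0, 1),
    window: int = 3,
) -> List[Dict[str, float]]:
    """Build one row per (forecast point, forecast distance) pair."""
    rows: List[Dict[str, float]] = []
    # First index that has enough history for every lag and the rolling window.
    start = max(max(lags), window - 1)
    for t in range(start, len(series)):
        history = {f"lag_{k}": series[t - k] for k in lags}
        history[f"rolling_mean_{window}"] = mean(series[t - window + 1 : t + 1])
        for d in distances:
            if t + d < len(series):  # the target must exist in the series
                rows.append(
                    {
                        "forecast_point": t,
                        "forecast_distance": d,
                        **history,
                        "target": series[t + d],
                    }
                )
    return rows


if __name__ == "__main__":
    daily_sales = [10, 12, 14, 16, 18, 20]
    for row in make_training_rows(daily_sales):
        print(row)
```

Once the data is in this shape, any ordinary regression model can be trained on it, with the forecast distance acting as just another feature.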

Values over time graph for time-related data. This helps to determine trends, weekly patterns, and seasonal patterns.

DataRobot Visual AI

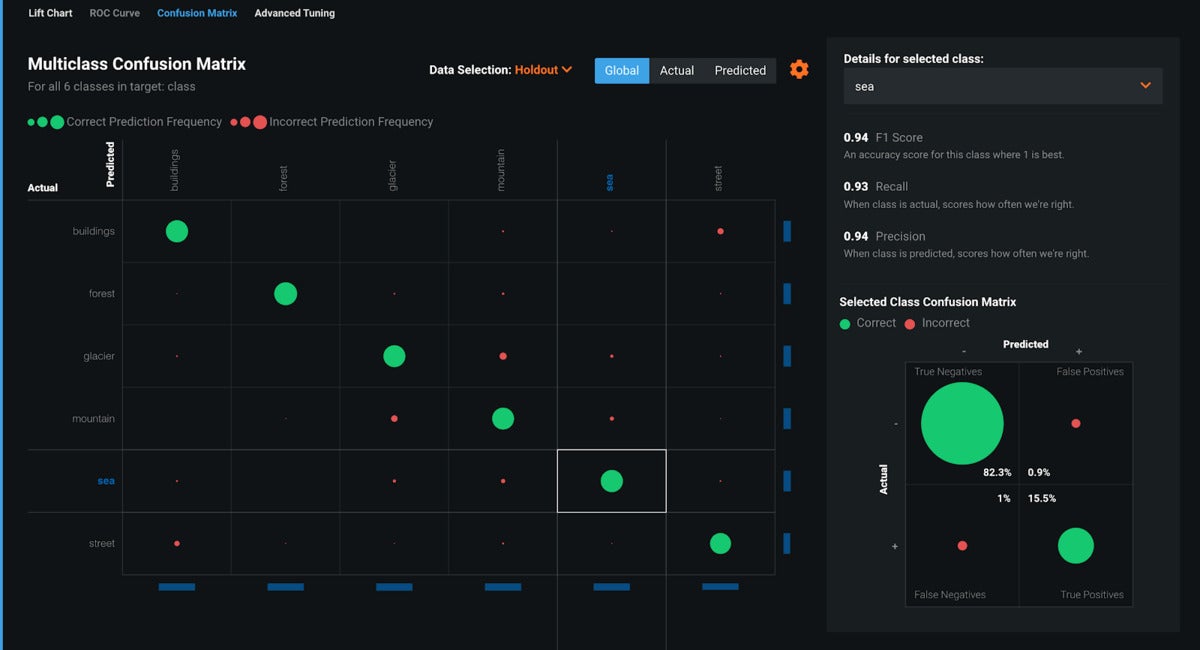

In April 2020 DataRobot added image processing to its arsenal. Visual AI allows you to build binary and multi-class classification and regression models with images. You can use it to build completely new image-based models or to add images as new features to existing models.

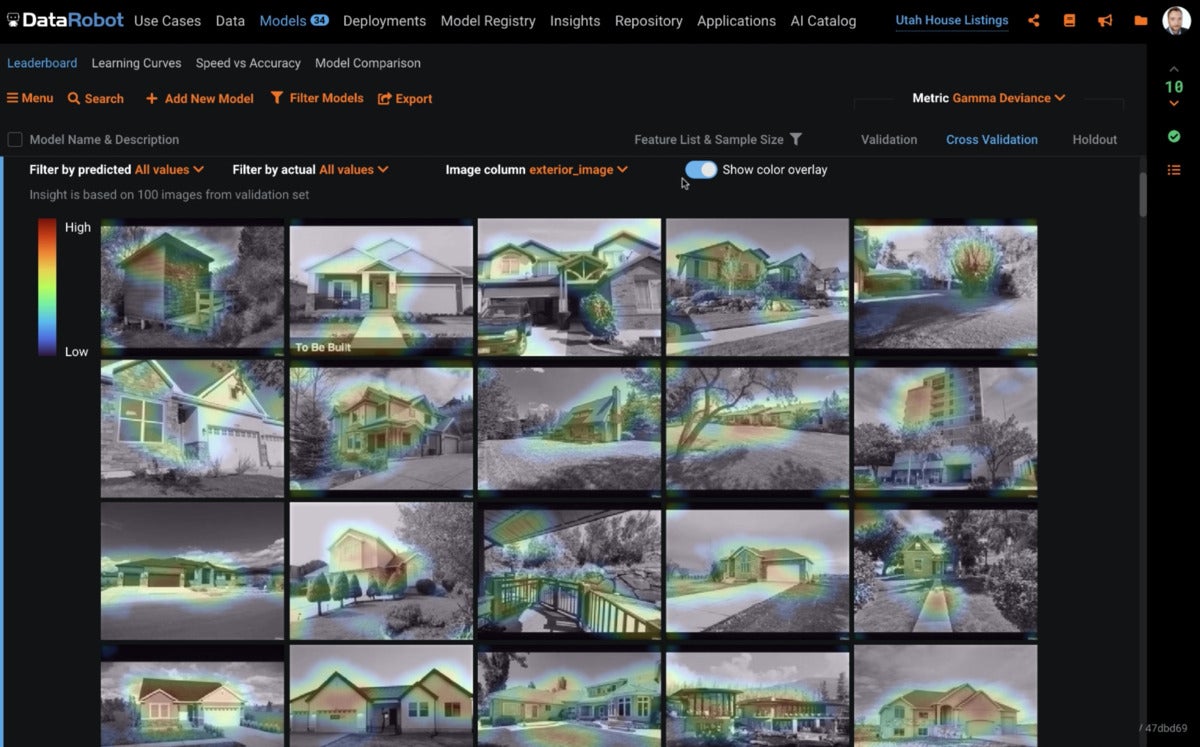

Visual AI uses pre-trained neural networks, and three new models: Neural Network Visualizer, Image Embeddings, and Activation Maps. As always, DataRobot can combine its models for different field types, so classified images can add accuracy to models that also use numeric, text, and geospatial data. For example, an image of a kitchen that is modern and spacious and has new-looking, high-end appliances might result in a home-pricing model increasing its estimate of the sale price.

There is no need to provision GPUs for Visual AI. Unlike the process of training image models from scratch, Visual AI’s pre-trained neural networks work fine on CPUs, and don’t even take very long.

This multi-class confusion matrix for image classification shows a fairly clean separation, with most of the predictions true positives or true negatives.

The color overlays in these house exterior images from the House Listings demo highlight the features the model factored into its sale price predictions. These factors were combined with other fields, such as square footage and number of bedrooms.

DataRobot Trusted AI

It’s easy for an AI model to go off the rails, and there are numerous examples of what not to do in the literature. Contributing factors include outliers in the training data, training data that isn’t representative of the real distribution, features that are dependent on other features, too many missing feature values, and features that leak the target value into the training.

DataRobot has guardrails to detect these conditions. You can fix them in the AutoML phase, or preferably in the data prep phase. Guardrails let you trust the model more, but they are not infallible.

Humble AI rules allow DataRobot to detect out-of-range or uncertain predictions as they happen, as part of the MLOps deployment. For example, a home value of $100 million in Cleveland is unheard-of; a prediction in that range is most likely a mistake. For another example, a predicted probability of 0.5 may indicate uncertainty. There are three ways of responding when humility rules fire: do nothing but keep track, so that you can later refine the model using more data; override the prediction with a “safe” value; or return an error.
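The three responses are easy to picture as a small rule engine wrapped around the prediction call. The sketch below is purely illustrative: the class names, thresholds, and "safe" value are hypothetical and do not reflect the DataRobot MLOps API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Action(Enum):
    TRACK = "track"        # do nothing, but record the event
    OVERRIDE = "override"  # replace the prediction with a "safe" value
    ERROR = "error"        # refuse to return a prediction


class HumilityError(Exception):
    """Raised when a rule with Action.ERROR fires."""


@dataclass
class HumilityRule:
    name: str
    fires: Callable[[float], bool]
    action: Action
    safe_value: Optional[float] = None


def apply_rules(
    prediction: float, rules: List[HumilityRule], log: List[Tuple[str, float]]
) -> float:
    """Check rules in order; log each rule that fires and apply its action."""
    for rule in rules:
        if not rule.fires(prediction):
            continue
        log.append((rule.name, prediction))
        if rule.action is Action.OVERRIDE:
            return rule.safe_value
        if rule.action is Action.ERROR:
            raise HumilityError(f"{rule.name} fired for prediction {prediction}")
    return prediction


# Hypothetical rules: the thresholds and the safe value are made up for illustration.
PRICE_RULES = [
    HumilityRule("price_out_of_range", lambda p: p > 10_000_000, Action.OVERRIDE, safe_value=250_000.0)
]
PROBABILITY_RULES = [
    HumilityRule("uncertain_probability", lambda p: 0.45 <= p <= 0.55, Action.TRACK)
]

if __name__ == "__main__":
    events: List[Tuple[str, float]] = []
    print(apply_rules(100_000_000.0, PRICE_RULES, events))  # overridden
    print(apply_rules(0.5, PROBABILITY_RULES, events))      # passed through, but tracked
    print(events)
```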

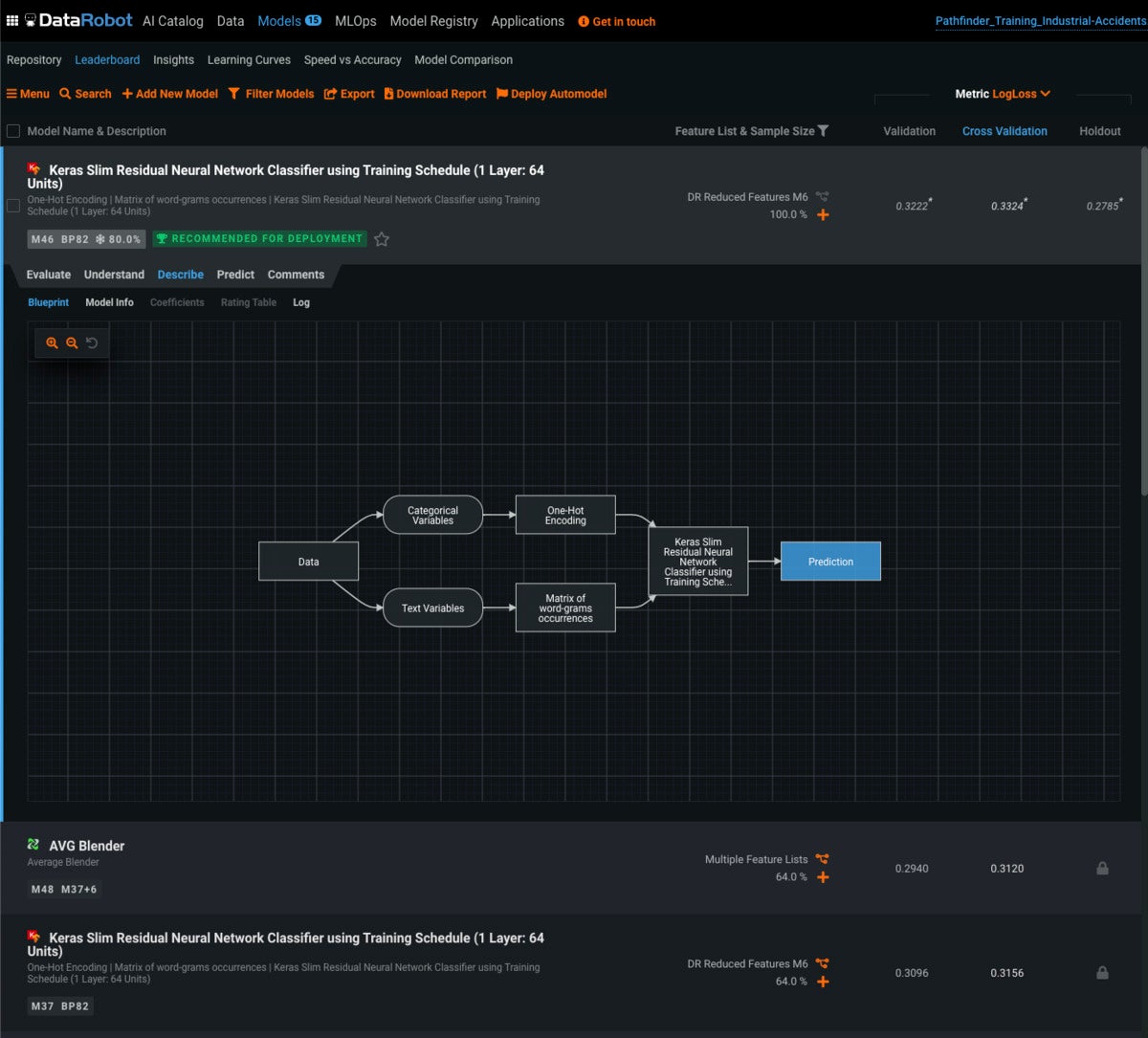

Too many machine learning models lack explainability; they are nothing more than black boxes. That’s often especially true of AutoML. DataRobot, however, goes to great lengths to explain its models. The diagram that follows is fairly simple, as neural network models go, but you can see the strategy of processing text and categorical variables in separate branches and then feeding the results into a neural network.

Blueprint for an AutoML model. This model processes categorical variables using one-hot encoding and text variables using word-grams, then factors them all into a Keras Slim Residual neural network classifier. You can drill into any box to see the parameters and get a link to the relevant documentation.
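For readers unfamiliar with the two preprocessing branches in that blueprint, here is a minimal sketch of one-hot encoding and word n-gram counting, joined into a single feature vector. It stops short of the neural network itself, and the function names, categories, and vocabulary are hypothetical rather than taken from the blueprint.

```python
from collections import Counter
from typing import Dict, List, Sequence


def one_hot(value: str, categories: Sequence[str]) -> List[int]:
    """Encode a categorical value as a 0/1 vector with one slot per known category."""
    return [1 if value == category else 0 for category in categories]


def word_ngrams(text: str, n_max: int = 2) -> Counter:
    """Count word n-grams (single words up to n_max-word phrases) in lowercase text."""
    tokens = text.lower().split()
    grams: Counter = Counter()
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            grams[" ".join(tokens[i : i + n])] += 1
    return grams


def featurize(row: Dict[str, str], categories: Sequence[str], vocabulary: Sequence[str]) -> List[int]:
    """Concatenate the categorical branch and the text branch into one feature vector."""
    grams = word_ngrams(row["text"])
    return one_hot(row["category"], categories) + [grams[gram] for gram in vocabulary]


if __name__ == "__main__":
    listing = {"category": "house", "text": "New kitchen new appliances"}
    print(featurize(listing, ["condo", "house"], ["new", "new kitchen"]))
```

In the blueprint, a vector like this would be the input to the classifier; the separate branches exist because categories and free text need different encodings before they can be combined.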

DataRobot MLOps

Once you have built a good model you can deploy it as a prediction service. That isn’t the end of the story, however. Over time, conditions change. We can see an example in the graphs below. Based on these results, some of the data that flows into the model (elementary school locations) needs to be updated, and then the model needs to be retrained and redeployed.

Looking at the feature drift from MLOps tells you when conditions change that affect the model’s predictions. Here we see that a new elementary school has opened, which typically raises the value of nearby homes.
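One common way to quantify drift in a single numeric feature is the population stability index (PSI), which compares the binned distribution seen at training time with the one seen in production. The sketch below is a generic illustration under my own assumptions (equal-width bins, a small floor for empty bins, made-up data); I am not claiming it is the metric or binning that DataRobot MLOps uses.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so production values outside the training range land in an edge bin.
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    # Hypothetical feature: distance in km from each home to the nearest elementary school.
    training = [float(i % 10) for i in range(100)]
    production = [d / 2 for d in training]  # a new school opens, so distances shrink
    print(round(population_stability_index(training, production), 3))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but that threshold is a convention, not something from the DataRobot product.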

Overall, DataRobot now has an end-to-end AutoML suite that takes you from data gathering through model building to deployment, monitoring, and management. DataRobot has paid attention to the pitfalls in AI model building and provided ways to mitigate many of them. I rate DataRobot very good, and a worthy competitor to Google, AWS, Microsoft, and H2O.ai. I haven’t reviewed the machine learning offerings from IBM, MathWorks, or SAS recently enough to rate them.

I was surprised and impressed to discover that DataRobot can run on CPUs without accelerators and produce models in a few hours, even when building neural network models that include image classification. That may give it a slight edge over the four competitors I mentioned for AutoML, because GPUs and TPUs are not cheap.
