Disinformation campaigns hit enterprises, too

When it comes to nation-state disinformation campaigns on social media, U.S. elections and political candidates aren’t the only targets.

Renée DiResta, research manager at the Stanford Internet Observatory, has been studying how disinformation and propaganda spread across social media platforms, as well as mainstream news cycles, to create “malign narratives.” In her keynote address for Black Hat USA 2020, titled “Hacking Public Opinion,” she warned that enterprises are also at risk of nation-state disinformation campaigns.

“While I’ve focused on state actors hacking public opinions on political topics, the threat is actually broader,” she said. “Reputational attacks on companies are just as easy to execute.”

DiResta began her keynote by describing how advanced persistent threat (APT) groups from Russia and China have waged successful disinformation campaigns to cause disruption around elections and geopolitical issues. These campaigns typically have one of four primary goals: distract the public from an issue or news story that has a negative impact on the nation-state; persuade the public to adopt the nation-state’s view of an issue; entrench public opinion further on a particular issue; and divide the public by amplifying dissenting opinions on both sides of an issue.

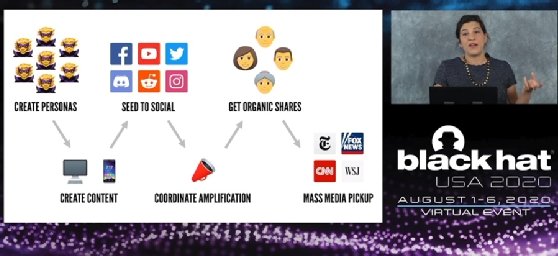

DiResta said these campaigns often create online personas and groups, and even fake sources for news media, to create content or malign narratives and engage in coordinated seeding and amplification on social media. Examples of these campaigns include the infamous Russian troll farm known as the Internet Research Agency, which aimed to sway public opinion around the 2016 U.S. presidential election, as well as the “hack-and-leak” attack on the Democratic National Committee in 2016 by a Russian APT and the subsequent disinformation around the Guccifer 2.0 persona.

But in addition to government or political entities, DiResta said nation-state threat actors have targeted companies in industries such as agriculture or energy in order to gain competitive economic advantages or to serve larger geopolitical goals. She cited a 2018 congressional report from the House Committee on Science, Space and Technology that described how Russian state actors used troll accounts to launch reputational attacks on U.S. energy companies around “fracking.”

“You can see those same models applied to attack the reputations of businesses online as well,” she said. “Companies that take a strong stand on divisive social issues may also find themselves embroiled in social media chatter that is not necessarily what it seems to be.”

Threat actors, for example, may try to “erode social cohesion” by amplifying existing tensions and inflaming those social media conversations. And those murky waters can be difficult for enterprises to navigate, she said.

“Very few companies will know where in the org chart to put responsibility for understanding those kinds of activities,” DiResta said. “Just because a lot of mentions of your brand are happening, that doesn’t necessarily mean they’re authentic or inauthentic, so this really kind of falls to the CISO at this point to try to understand when these attacks are focused on companies, when they should respond, and how they should think about them.”

DiResta said these reputational attacks and disinformation campaigns require more from enterprises than just social media analysis. “We need to be doing more red teaming,” she said. “We need to be thinking about the social and media ecosystem as a system, proactively envisioning what kinds of manipulation are possible.”

However, DiResta said it’s extremely difficult to determine how much a specific disinformation campaign may have influenced public opinion, whether it concerns a political issue or a corporate brand. The Stanford Internet Observatory maps how disinformation spreads and how narratives are created on media platforms, but she said more work needs to be done on their effects.

“We can see how people are reacting to this stuff but can’t really see if it changed hearts and minds,” she said.

Black Hat co-founder Jeff Moss agreed that more research is needed to get a better handle on the problem.

“There’s not enough actual research being developed and studied to inform policymakers to tell us what to do about it,” he said during his introduction of DiResta.